Rules Engine

Many customers need to execute a set of rules to decide on an outcome. Often the rules must run against the customer’s own data and in a non-sequential order: whether a rule runs at all depends on the outcome of a preceding rule. This becomes even more complicated when the data set has dependent rules that must run only when certain conditions are met.

These needs call for an approach that can cater to the disparate requirements of different customers while providing an easy way to configure rules, even for citizen developers.

“Rules Engine” is a framework for configuring a master set of rules and executing some or all of them for each client. Although it is general-purpose and can serve clients in different domains, it was deployed to production for a financial client that needed its employees’ pre-clearance requests to be approved or denied based on a complex set of client-specific rules.

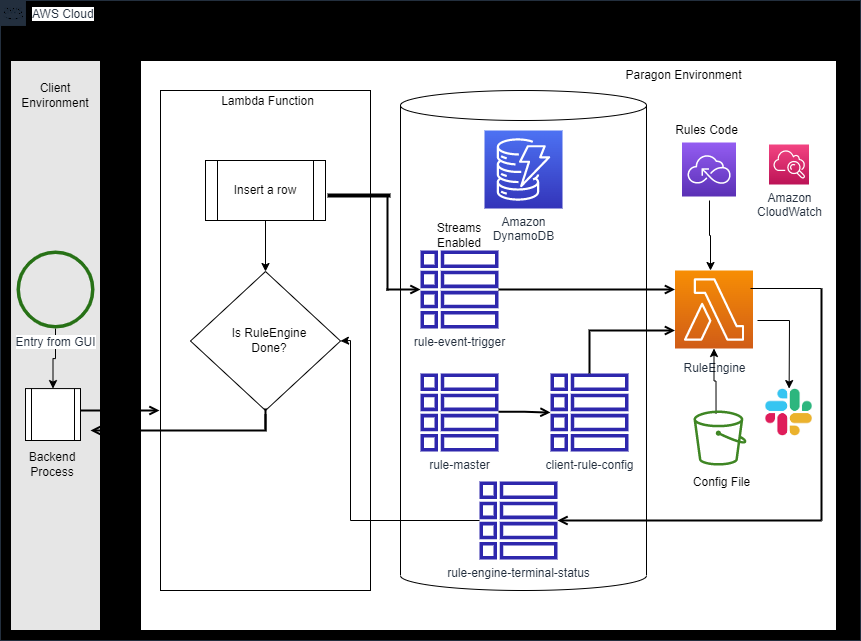

The Rules Engine was designed to be completely serverless. AWS was chosen to host it, but the design deliberately keeps it easy to migrate to other cloud service providers (CSPs), such as Microsoft Azure.

Within AWS, DynamoDB hosts the tables that drive the logic. One of these tables has DynamoDB Streams enabled, so when a client process inserts a row into it, a Lambda function is executed automatically. Another table holds the master set of rules for all clients and records, for each rule, the name of the Lambda function that implements it. Because each rule is its own function, rules can be written in different languages (Node.js, Python, Java, etc.), so the development team is not tied to a specific technology.
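
A minimal sketch of the two pieces described above, assuming Python: unwrapping the rows inserted into the stream-enabled table from a DynamoDB Streams event, and resolving a rule’s Lambda function name from the master rules table before invoking it. The attribute names `rule_id` and `lambda_name` are hypothetical, the master table is modeled as a plain dict (a real deployment would read it from DynamoDB), and `lambda_client` is assumed to behave like a boto3 Lambda client.

```python
import json
from typing import Any, Dict, List


def new_images(event: Dict[str, Any]) -> List[Dict[str, str]]:
    """Return the rows inserted into the stream-enabled table.

    Only INSERT records are kept, and only string ("S") attributes are
    unwrapped from DynamoDB's typed format, which is enough for this sketch.
    """
    rows = []
    for record in event.get("Records", []):
        if record.get("eventName") != "INSERT":
            continue
        image = record["dynamodb"].get("NewImage", {})
        rows.append({name: attr["S"] for name, attr in image.items() if "S" in attr})
    return rows


def invoke_rule(
    lambda_client: Any,
    master_rules: Dict[str, Dict[str, str]],
    rule_id: str,
    payload: Dict[str, Any],
) -> Dict[str, Any]:
    """Look up the Lambda function that implements `rule_id` and call it synchronously.

    `master_rules` maps rule ids to master-table rows; "lambda_name" is a
    hypothetical attribute name used only for illustration.
    """
    function_name = master_rules[rule_id]["lambda_name"]
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",
        Payload=json.dumps(payload).encode("utf-8"),
    )
    # boto3 returns the function's result as a streaming body in response["Payload"].
    return json.loads(response["Payload"].read())
```

Passing the client in, rather than creating it inside the function, keeps the sketch testable and makes it easier to swap in another provider’s SDK.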

A client-specific table holds the set of rules to be executed for each client. This table also references a client-specific JSON file containing the input values, the expected output, and the action to take based on each outcome (for example, move to the next rule, call a specific rule, or mark the process as complete). The JSON file is hosted in S3, where citizen developers can modify and upload it as requirements change, without developers having to make any code changes.
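
The JSON configuration could look something like the following sketch. The schema (`rules`, `inputs`, `expected_output`, `on_match`, `on_mismatch`, `action`, `status`) is an assumption made for illustration, not the production format; it encodes the three actions mentioned above: move to the next rule in the client’s sequence, call a specific rule, or complete the process with a status. `load_config` assumes a boto3-style S3 client.

```python
import json
from typing import Any, Dict, List, Tuple

# Hypothetical client-specific configuration, as it might be stored in S3.
EXAMPLE_CONFIG = json.loads("""
{
  "rules": {
    "R1": {"inputs": {"max_trade_amount": 10000}, "expected_output": "PASS",
           "on_match": {"action": "next"},
           "on_mismatch": {"action": "call", "rule": "R3"}},
    "R2": {"inputs": {}, "expected_output": "PASS",
           "on_match": {"action": "complete", "status": "APPROVED"},
           "on_mismatch": {"action": "complete", "status": "DENIED"}},
    "R3": {"inputs": {}, "expected_output": "PASS",
           "on_match": {"action": "next"},
           "on_mismatch": {"action": "complete", "status": "DENIED"}}
  }
}
""")


def load_config(s3_client: Any, bucket: str, key: str) -> Dict[str, Any]:
    """Read a client's JSON configuration from S3 (boto3-style client assumed)."""
    body = s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()
    return json.loads(body)


def next_step(config: Dict[str, Any], sequence: List[str],
              current_rule: str, outcome: str) -> Tuple[str, str]:
    """Return ("run", rule_id) to continue or ("complete", status) to finish.

    `sequence` is the client's ordered rule list from the client-specific table.
    """
    rule_cfg = config["rules"][current_rule]
    matched = outcome == rule_cfg["expected_output"]
    branch = rule_cfg["on_match"] if matched else rule_cfg["on_mismatch"]
    action = branch["action"]
    if action == "next":
        position = sequence.index(current_rule)
        if position + 1 < len(sequence):
            return ("run", sequence[position + 1])
        # No rules left in the sequence: finish with the branch's status, if any.
        return ("complete", branch.get("status", "COMPLETE"))
    if action == "call":
        return ("run", branch["rule"])
    if action == "complete":
        return ("complete", branch["status"])
    raise ValueError(f"unknown action {action!r} for rule {current_rule}")
```

With this layout, changing the flow for a client means editing and re-uploading the JSON file; `next_step` itself does not change.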

When a client process (for example, a Java application) inserts a row into the trigger table, the Lambda function that holds the core Rules Engine logic is triggered and executes the rules one at a time. It reads the configuration from the client’s JSON file and, based on the outcome of the current rule, decides which rule to call next or whether to return a success or failure message. If another rule must be executed (the JSON file can also name the rule to execute next), the function inserts another row into the trigger table, which repeats the whole process. The chain therefore runs asynchronously, without the need for a loop, for efficiency.
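
The asynchronous chaining can be sketched as a single step function: run the current rule, ask the configuration what happens next, and either write a new trigger row (which the stream turns into the next invocation) or record a final status. This is a sketch under assumptions: `run_rule` and `decide` stand in for the rule invocation and the JSON lookup, the table objects are assumed to expose a boto3-style `put_item(Item=...)`, and the attribute names `request_id`, `rule_id`, `previous_outcome`, `status`, and `last_rule` are hypothetical.

```python
from typing import Any, Callable, Dict, Tuple


def handle_trigger_row(
    row: Dict[str, str],
    run_rule: Callable[[str, Dict[str, str]], str],
    decide: Callable[[str, str], Tuple[str, str]],
    trigger_table: Any,
    status_table: Any,
) -> Tuple[str, str]:
    """Process one trigger-table row without looping over the remaining rules.

    If another rule must run, a new trigger row is written; the DynamoDB stream
    then invokes the handler again for that row. Otherwise the final status is stored.
    """
    rule_id = row["rule_id"]                # hypothetical attribute name
    outcome = run_rule(rule_id, row)
    kind, value = decide(rule_id, outcome)  # ("run", next_rule) or ("complete", status)
    if kind == "run":
        # Chain to the next rule by inserting a new row instead of looping here.
        trigger_table.put_item(Item={**row, "rule_id": value, "previous_outcome": outcome})
    else:
        status_table.put_item(
            Item={"request_id": row["request_id"], "status": value, "last_rule": rule_id}
        )
    return kind, value
```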

If the rules must be executed synchronously, the calling program can pass a switch to request it. In this mode, the caller also specifies the list of terminal statuses that mark completion of the Rules Engine, and control returns to the caller as soon as one of them is reached. This lets the engine run synchronously or asynchronously, depending on the client’s needs.
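
One way to honor the synchronous switch, sketched under the assumption that the final status is written somewhere the caller-facing function can read (for example, a status table keyed by request id): poll until one of the caller’s terminal statuses appears or a timeout expires. Polling is one possible mechanism, chosen here for simplicity; `get_status` and the timeout and interval defaults are hypothetical.

```python
import time
from typing import Callable, Iterable, Optional


def wait_for_terminal_status(
    get_status: Callable[[str], Optional[str]],
    request_id: str,
    terminal_statuses: Iterable[str],
    timeout_s: float = 5.0,
    poll_interval_s: float = 0.2,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> str:
    """Block until the request reaches one of the caller's terminal statuses.

    Raises TimeoutError if no terminal status is seen before the deadline.
    """
    terminal = set(terminal_statuses)
    deadline = clock() + timeout_s
    while True:
        status = get_status(request_id)  # hypothetical status lookup
        if status in terminal:
            return status
        if clock() >= deadline:
            raise TimeoutError(
                f"request {request_id} did not reach {sorted(terminal)} "
                f"within {timeout_s}s (last status: {status})"
            )
        sleep(poll_interval_s)
```

`sleep` and `clock` are injectable so the loop can be tested without real delays.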

Each client’s requirements can be specified in the table as pipe-delimited parameters, which determine the levels of nesting to traverse in the JSON file to reach the client-specific data for that rule.
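
A minimal sketch of how such a pipe-delimited parameter might be interpreted, assuming each segment is one key in the nested JSON, so `acme|preclear|equity` selects `config["acme"]["preclear"]["equity"]`. The parameter format and key names are hypothetical.

```python
from typing import Any, Dict


def resolve_client_data(config: Dict[str, Any], path_spec: str) -> Any:
    """Walk nested JSON using a pipe-delimited key path such as 'acme|preclear|equity'."""
    node: Any = config
    for key in path_spec.split("|"):
        key = key.strip()
        if not key:
            continue  # tolerate stray or trailing pipes
        if not isinstance(node, dict) or key not in node:
            raise KeyError(f"path {path_spec!r}: no key {key!r} at this level")
        node = node[key]
    return node
```

A shorter path returns a larger subtree, which is how the number of segments controls how deep the lookup goes.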

Once the master set of rules required by any client has been coded, the rest of the process is simply a matter of configuring the rules in the table and the JSON file, which can be done on the fly. The rules emit standard logging information, available through CloudWatch, for easy debugging and follow-up actions. CLI commands were created to gather this information from AWS without logging in to the console. Similarly, CLI commands were created to re-run the process without a calling program having to invoke it through the APIs. These are useful when the support team needs to re-run the process, or simply wants to know what the outcome of a set of rules for a client will be.
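
The re-run commands could be built along these lines. This is a hypothetical Python CLI, not the team’s actual tooling: it builds a trigger-table row for a client and a starting rule, and either prints it (`--dry-run`) or writes it to the trigger table with boto3, which then starts the engine just as a row from a calling program would. The option names, the default table name, and the `source` attribute are assumptions.

```python
import argparse
import json
import uuid
from typing import Dict, List, Optional


def build_trigger_item(client_id: str, rule_id: str,
                       request_id: Optional[str] = None) -> Dict[str, str]:
    """Build a trigger-table row; all attribute names here are hypothetical."""
    return {
        "request_id": request_id or str(uuid.uuid4()),
        "client_id": client_id,
        "rule_id": rule_id,
        "source": "cli-rerun",
    }


def main(argv: Optional[List[str]] = None) -> Dict[str, str]:
    parser = argparse.ArgumentParser(description="Re-run the Rules Engine for one client (hypothetical CLI).")
    parser.add_argument("--client-id", required=True)
    parser.add_argument("--start-rule", required=True, help="rule to start from")
    parser.add_argument("--request-id", help="reuse an existing request id when re-running")
    parser.add_argument("--table", default="rules_engine_trigger", help="trigger table name (hypothetical)")
    parser.add_argument("--dry-run", action="store_true", help="print the row instead of writing it")
    args = parser.parse_args(argv)

    item = build_trigger_item(args.client_id, args.start_rule, args.request_id)
    if args.dry_run:
        print(json.dumps(item, sort_keys=True))
    else:
        import boto3  # only needed when actually writing to DynamoDB
        boto3.resource("dynamodb").Table(args.table).put_item(Item=item)
    return item


if __name__ == "__main__":
    main()
```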

The Rules Engine is designed to run from a central environment. To obtain credentials for a client’s database, it uses AssumeRole to read the values from Secrets Manager in the client’s own environment and then connects to the client’s database, so the secrets are not duplicated in the central environment and the process runs securely. Authentication is built into the Lambda functions and at the API level, so only authorized programs can invoke the Rules Engine. CloudWatch alerts were set up to notify the team (via Slack and email) when the rules run longer than a pre-specified period (5 seconds in one case).
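
A minimal sketch of the credential lookup, assuming the secret in the client’s environment is stored as a JSON string (as is common for database secrets). It uses the STS `AssumeRole` and Secrets Manager `GetSecretValue` calls through boto3-style clients that are passed in; the session name `rules-engine` and the role ARN and secret id in the usage example are hypothetical.

```python
import json
from typing import Any, Callable, Dict


def get_client_db_secret(
    sts_client: Any,
    client_factory: Callable[..., Any],
    role_arn: str,
    secret_id: str,
    region: str,
) -> Dict[str, Any]:
    """Assume a role in the client's account and read the DB secret stored there."""
    creds = sts_client.assume_role(
        RoleArn=role_arn,
        RoleSessionName="rules-engine",  # hypothetical session name
    )["Credentials"]
    # Create the Secrets Manager client with the temporary credentials,
    # so the secret is read from the client's environment, not copied centrally.
    secrets = client_factory(
        "secretsmanager",
        region_name=region,
        aws_access_key_id=creds["AccessKeyId"],
        aws_secret_access_key=creds["SecretAccessKey"],
        aws_session_token=creds["SessionToken"],
    )
    response = secrets.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])  # assumes a JSON-formatted secret
```

In production, `sts_client` would be `boto3.client("sts")` and `client_factory` would be `boto3.client`, for example `get_client_db_secret(boto3.client("sts"), boto3.client, "arn:aws:iam::111122223333:role/rules-engine-reader", "client-db", "us-east-1")`.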

Together, this process and the security mechanisms built around it make the Rules Engine flexible, extensible, secure, serverless, and portable.